GitHub repositories archived

Due to inactivity, most of the repositories on GitHub have been archived.

A new release of wai.annotations is out now: 0.8.0

This release contains two major updates:

wai.annotations.coqui module added for processing Coqui AI STT and TTS datasets

a bug fix to the dataset splitting (--split-names/--split-ratios) that breaks backwards compatibility;

the new --no-interleaving flag enables the old behavior again

Here is a detailed overview of all the changes since the 0.7.8 release:

wai.annotations.tf is now optional

install.sh now has an -o flag to install optional modules when installing the latest version (-l)

Upgraded wai.annotations.commonvoice to 1.0.2

the expected header now uses accents rather than accent (but the reader accepts both)
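
Accepting both header names usually comes down to a simple column fallback. The sketch below is a hypothetical illustration, not the reader's actual code: it assumes a tab-separated Common Voice-style file and only shows how an "accents" column can be preferred while still accepting the older "accent" one.

```python
import csv
import io


def read_accents(tsv_text):
    """Return the accent value of each row, accepting either header name.

    Hypothetical helper: only the 'accents'/'accent' fallback reflects the
    release note; the rest of the column layout is an assumption.
    """
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    fieldnames = reader.fieldnames or []
    # Prefer the newer 'accents' column, fall back to the older 'accent' one
    column = "accents" if "accents" in fieldnames else "accent"
    return [row.get(column, "") for row in reader]


old = "path\tsentence\taccent\na.mp3\thello\tus\n"
new = "path\tsentence\taccents\nb.mp3\tworld\tengland\n"
print(read_accents(old))  # ['us']
print(read_accents(new))  # ['england']
```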

Upgraded wai.annotations.core to 0.2.0

FilterLabels ISP now treats elements as negatives if no labels are left after filtering (so that discard-negatives can be used in the pipeline); it now also works in the image classification domain

FilterLabels ISP can filter out located objects that don't fall within a certain region (x,y,w,h - normalized or absolute) using a supplied IoU threshold; useful when concentrating on annotations in the center of an image, e.g., for images generated with the subimages ISP (object detection domain only)

logging._LoggingEnabled module now sets the numba logging level to WARNING

logging._LoggingEnabled module now sets the shapely logging level to WARNING

core.domain.Data class now stores the path of the file as well

Rename ISP allows renaming of files, e.g., for disambiguating across batches

batch_split.Splitter now handles cases when the regexp does not produce any matches (and outputs a warning when in verbose mode)

Added LabelPresent ISP, which skips object detection images that do not have specified labels (or if annotations do not overlap with defined regions; can be inverted).

Using wai.common==0.0.40 now to avoid parse errors being output when accessing the poly_x/poly_y meta-data of LocatedObject instances when these values are empty strings.

The CleanTranscript ISP can be used to clean up speech transcripts.

Bug fix for splitting: the split schedule was calculated with a swapped iteration order, leading to runs of splits rather than the desired interleaving. Added the --no-interleave flag to re-enable the old behavior for backwards compatibility.
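
The release note does not spell out the scheduling algorithm, so the following is only our own illustration of why the iteration order matters. The function names and the "largest deficit" rule are assumptions, not the code in wai.annotations.core: looping over the splits on the outside produces runs, while picking the split that is furthest behind its target share at each step interleaves them.

```python
from fractions import Fraction


def run_schedule(ratios):
    """Old order: outer loop over the splits, producing runs like AAAAAAABBB."""
    schedule = []
    for name, count in ratios.items():
        schedule.extend([name] * count)
    return schedule


def interleaved_schedule(ratios):
    """Interleaved order: at each step, pick the split furthest behind its target share."""
    total = sum(ratios.values())
    assigned = dict.fromkeys(ratios, 0)
    schedule = []
    for step in range(1, total + 1):
        # deficit = items the split should have received by now minus items it has;
        # ties go to the split listed first
        best = max(ratios, key=lambda n: Fraction(ratios[n] * step, total) - assigned[n])
        assigned[best] += 1
        schedule.append(best)
    return schedule


ratios = {"train": 7, "test": 3}
print(run_schedule(ratios))
# ['train', 'train', 'train', 'train', 'train', 'train', 'train', 'test', 'test', 'test']
print(interleaved_schedule(ratios))
# ['train', 'test', 'train', 'train', 'train', 'test', 'train', 'train', 'test', 'train']
```

Both schedules contain the same number of items per split; only the order differs, which is why the old behavior can still be requested for backwards compatibility.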

Upgraded wai.annotations.imgaug to 1.0.6

sub-images plugin now has a --verbose flag and initializes the regions only once

Upgraded wai.annotations.layersegments to 1.0.2

FromLayerSegments class now outputs a logging message if setting the new annotation indices fails. Since this error occurs before the "wai.annotations - Sourced ..." logging message, this makes it possible to track down the image causing the problem.

Added --lenient flag to FromLayerSegments class which allows conversion of non-binary images with just two unique colors to binary ones instead of throwing an error.

Added --invert flag to FromLayerSegments class which allows inverting the colors (b/w <-> w/b) of the binary annotation images.
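
Conceptually, --lenient and --invert amount to a small pixel mapping. The helper below is hypothetical and works on a flat list of grayscale values rather than real image objects; it assumes that a strictly binary image uses only 0 and 255 and that, in lenient mode, the darker of the two colors becomes background.

```python
def to_binary(pixels, lenient=False, invert=False):
    """Map a flat list of grayscale pixel values to 0/1 labels (hypothetical helper).

    Strict mode accepts only the values 0 and 255 (an assumption for this sketch);
    lenient mode accepts any two unique values and maps the darker one to 0.
    """
    unique = sorted(set(pixels))
    if set(unique) <= {0, 255}:
        high = 255
    elif lenient and len(unique) == 2:
        high = unique[1]
    else:
        raise ValueError(f"not a binary image, unique values: {unique}")
    mapped = [1 if p == high else 0 for p in pixels]
    if invert:
        # Swap foreground and background (b/w <-> w/b)
        mapped = [1 - m for m in mapped]
    return mapped


print(to_binary([0, 255, 255]))                 # [0, 1, 1]
print(to_binary([10, 200, 10], lenient=True))   # [0, 1, 0]
print(to_binary([0, 255], invert=True))         # [1, 0]
```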

Upgraded wai.annotations.video to 1.0.2

VideoFileReader source now passes on a file path with the frames as well

Upgraded wai.annotations.yolo to 1.0.1

the read_labels_file method of FromYOLOOD now strips leading/trailing whitespace from the labels.
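
In the YOLO convention, the class ids in the .txt annotation files index into a labels file with one label per line, so stray spaces or Windows line endings would otherwise end up in the label names. A minimal sketch of such a reader, assuming only trailing empty lines should be dropped (so the remaining indices stay aligned); the function name is hypothetical.

```python
def read_labels(text):
    """Return one label per line with surrounding whitespace (incl. '\\r') removed."""
    labels = [line.strip() for line in text.splitlines()]
    # Drop trailing empty lines only, so class ids still match line positions
    while labels and not labels[-1]:
        labels.pop()
    return labels


print(read_labels("cat \n dog\r\n\n"))  # ['cat', 'dog']
```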

Added wai.annotations.coqui 1.0.0

from-coqui-stt-sp: reads speech transcriptions in the Coqui STT CSV-format

from-coqui-tts-sp: reads speech transcriptions in the Coqui TTS text-format

to-coqui-stt-sp: writes speech transcriptions in the Coqui STT CSV-format

to-coqui-tts-sp: writes speech transcriptions in the Coqui TTS text-format
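
For orientation, Coqui STT (like DeepSpeech before it) trains from CSV files whose columns are, to our understanding, wav_filename, wav_filesize and transcript. The round trip below assumes that layout; the helper names are hypothetical, it does not reproduce the plugins' code, and the Coqui TTS text format is not covered.

```python
import csv
import io

# Column layout assumed for the Coqui STT CSV format (inherited from DeepSpeech)
FIELDS = ["wav_filename", "wav_filesize", "transcript"]


def write_stt_csv(rows):
    """Serialize (filename, size_in_bytes, transcript) tuples to CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for filename, size, transcript in rows:
        writer.writerow({"wav_filename": filename, "wav_filesize": size, "transcript": transcript})
    return buffer.getvalue()


def read_stt_csv(text):
    """Parse CSV text back into (filename, size_in_bytes, transcript) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [(row["wav_filename"], int(row["wav_filesize"]), row["transcript"]) for row in reader]


rows = [("clips/a.wav", 32044, "hello world"), ("clips/b.wav", 48044, "good morning")]
text = write_stt_csv(rows)
print(text.splitlines()[0])  # wav_filename,wav_filesize,transcript
print(read_stt_csv(text) == rows)  # True
```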

The Oxford Pets dataset has been made available for download from our datasets website:

https://datasets.cms.waikato.ac.nz/ufdl/oxford-pets/

The original dataset has been converted into several different domains:

image classification

image segmentation

instance segmentation

object detection (bbox; head or animal).

A new release of wai.annotations is out now: 0.7.8

This release contains three major updates:

an additional domain for audio classification, which uses the -ac suffix for domain-specific plugins

wai.annotations.audio module added for processing and augmenting audio data

wai.annotations.generic module makes it easier to plug in your own Python classes (derived from wai.annotations classes), as you don't have to write a lot of boiler-plate code to integrate them into the framework (the wrappers take care of that!); check out the manual for examples

Here is a detailed overview of all the changes since the 0.7.7 release:

wai.annotations.core updated to 0.1.8

Added new audio domain for classification using suffix -ac

Added dataset reader for audio files: from-audio-files-sp, from-audio-files-ac

Added dataset writer for audio files: to-audio-files-sp, to-audio-files-ac

Added dummy sink for audio files: to-void-ac

Added ISP for selecting a sub-sample from the stream: sample
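
The release note does not say how the sample ISP chooses its items. One simple way to sub-sample a stream is a seeded per-item random draw, sketched below as a hypothetical generator; the ratio and seed parameters are assumptions for illustration, not the ISP's actual options.

```python
import random


def sample(stream, ratio, seed=1):
    """Yield roughly `ratio` of the items from `stream` (0 < ratio <= 1).

    A fixed seed makes the selection reproducible between runs.
    """
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")
    rng = random.Random(seed)
    for item in stream:
        # random() returns a value in [0, 1), so ratio=1.0 keeps everything
        if rng.random() < ratio:
            yield item


subset = list(sample(range(1000), 0.3, seed=42))
print(len(subset))  # roughly 300
```

Because the generator processes one item at a time, it never needs to know the total stream length, which suits a pipeline where items arrive one by one.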

wai.annotations.subdir updated to 1.0.1

added reader/writer for audio classification: from-subdir-ac and to-subdir-ac

wai.annotations.audio added for audio support (currently at 1.0.1)

audio-info-ac: sink for collating/outputting information on the audio classification files

audio-info-sp: sink for collating/outputting information on the speech files

convert-to-mono: ISP for converting MP3/OGG/FLAC/WAV to mono WAV

convert-to-wav: ISP for converting MP3/OGG/FLAC to WAV

mel-spectrogram: XDC for generating a plot from a mel spectrogram (outputs image classification instance)

mfcc-spectrogram: XDC for generating plots from Mel-frequency cepstral coefficients (outputs image classification instance).

pitch-shift: augmentation ISP for shifting the pitch

resample-audio: ISP for resampling MP3/OGG/FLAC/WAV

stft-spectrogram: XDC for generating a plot from a short-time Fourier transform spectrogram (outputs image classification instance)

time-stretch: augmentation ISP for time-stretching audio (speed up/slow down)

trim-audio: ISP for trimming silence from audio
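
As an illustration of what a mono conversion involves once the audio is decoded, the sketch below averages interleaved PCM samples across channels. It assumes already-decoded integer samples (the plugin itself handles MP3/OGG/FLAC/WAV) and uses plain averaging as the downmix, which is one common choice but not necessarily the one convert-to-mono uses; the helper name is hypothetical.

```python
def to_mono(samples, channels):
    """Average interleaved PCM samples (L, R, L, R, ...) into a single channel."""
    if channels < 1 or len(samples) % channels:
        raise ValueError("sample count must be a multiple of the channel count")
    return [
        round(sum(samples[i:i + channels]) / channels)  # one output sample per frame
        for i in range(0, len(samples), channels)
    ]


print(to_mono([100, 200, -50, 50], channels=2))  # [150, 0]
```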

wai.annotations.generic added (currently at 1.0.0)

generic-source-ac: wrapper around a user-supplied source class for audio classification

generic-source-ic: wrapper around a user-supplied source class for image classification

generic-source-is: wrapper around a user-supplied source class for image segmentation

generic-source-od: wrapper around a user-supplied source class for object detection

generic-source-sp: wrapper around a user-supplied source class for speech

generic-isp-ac: wrapper around a user-supplied ISP class for audio classification

generic-isp-ic: wrapper around a user-supplied ISP class for image classification

generic-isp-is: wrapper around a user-supplied ISP class for image segmentation

generic-isp-od: wrapper around a user-supplied ISP class for object detection

generic-isp-sp: wrapper around a user-supplied ISP class for speech

generic-sink-ac: wrapper around a user-supplied sink class for audio classification

generic-sink-ic: wrapper around a user-supplied sink class for image classification

generic-sink-is: wrapper around a user-supplied sink class for image segmentation

generic-sink-od: wrapper around a user-supplied sink class for object detection

generic-sink-sp: wrapper around a user-supplied sink class for speech

A new release of wai.annotations is out now: 0.7.7

Here is an overview of all the changes since the 0.7.6 release:

wai.annotations.bluechannel 1.0.2

FromBlueChannel class now outputs a logging message if setting the new annotation indices fails. Since this error occurs before the "wai.annotations - Sourced ..." logging message, this makes it possible to track down the image causing the problem.

wai.annotations.coco 1.0.2

method located_object_to_annotation (module: wai.annotations.coco.util._located_object_to_annotation) skips invalid polygons now rather than stopping the conversion

wai.annotations.core 0.1.7

Added discard-invalid-images ISP for removing corrupt images or annotations with no image attached.

Added batch-split sub-command for splitting individual batches of annotations into subsets like train/test/val. Supports grouping of files within batches (e.g., multiple images of the same object).

Added filter-metadata ISP for filtering object detection annotations by their meta-data.

Restricted the maximum number of characters per line in the help output to 100 to keep long help strings readable.

The polygon-discarder now discards annotations that either have no polygon or have an invalid polygon.

Added descriptions to the help screens of the main commands.

The ImageSegmentationAnnotation class now outputs the unique values in its exception message when there are more unique values than labels.

The Data class (module: wai.annotations.core.domain) now outputs a warning message if a file cannot be read; also added LoggingEnabled mixin.
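
The grouping supported by batch-split can be pictured as "split groups, not files". The following is a hypothetical sketch rather than the sub-command's implementation: it assumes the group is taken from the first capturing group of a user-supplied regular expression, that files without a match form their own group, and that ratios are given as weights per subset.

```python
import random
import re
from collections import defaultdict


def split_batch(files, ratios, group_regex, seed=1):
    """Split file names into subsets, keeping files of the same group together.

    `ratios` maps subset name -> weight, e.g. {"train": 70, "test": 30};
    `group_regex` must contain one capturing group that identifies the group.
    """
    pattern = re.compile(group_regex)
    groups = defaultdict(list)
    for name in files:
        match = pattern.search(name)
        key = match.group(1) if match else name  # unmatched files form their own group
        groups[key].append(name)
    keys = sorted(groups)
    random.Random(seed).shuffle(keys)  # reproducible group order
    total = sum(ratios.values())
    result = {subset: [] for subset in ratios}
    start, cumulative = 0, 0
    for subset, weight in ratios.items():
        cumulative += weight
        end = round(len(keys) * cumulative / total)  # last subset always ends at len(keys)
        for key in keys[start:end]:
            result[subset].extend(groups[key])
        start = end
    return result


files = ["obj1_a.jpg", "obj1_b.jpg", "obj2_a.jpg", "obj3_a.jpg", "misc.jpg"]
print(split_batch(files, {"train": 2, "test": 1}, r"^(obj\d+)_"))
```

Splitting at the group level means two images of the same object can never end up in both train and test, at the cost of the subset sizes only approximating the requested ratios.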

wai.annotations.grayscale 1.0.1

FromGrayscale class now uses numpy.frombuffer instead of deprecated numpy.fromstring method.

FromGrayscale class now outputs a logging message if setting the new annotation indices fails. Since this error occurs before the "wai.annotations - Sourced ..." logging message, this makes it possible to track down the image causing the problem.

wai.annotations.imgaug 1.0.5

Added sub-images plugin for extracting regions (including their annotations) based on one or more bounding box definitions from the images coming through and only forwarding these sub-images

wai.annotations.imgstats 1.0.3

label-dist-od now has --label-key option to specify the meta-data key that stores the label

label-dist-XY now sorts the labels alphabetically before outputting the statistics

added area-histogram-is and area-histogram-od
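
A label distribution with a configurable label key and alphabetical output boils down to a counter over meta-data values. In this sketch the annotations are plain dicts standing in for the meta-data of LocatedObject instances, and the default key "type" is an assumption for illustration; the helper name is hypothetical.

```python
from collections import Counter


def label_distribution(objects, label_key="type"):
    """Count labels stored under `label_key` and return (label, count) pairs sorted alphabetically.

    Objects without that key are ignored.
    """
    counts = Counter(obj[label_key] for obj in objects if label_key in obj)
    return sorted(counts.items())


objects = [{"type": "dog"}, {"type": "cat"}, {"type": "dog"}, {}]
print(label_distribution(objects))  # [('cat', 1), ('dog', 2)]
```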

wai.annotations.imgvis 1.0.3

added combine-annotations-od for combining overlapping annotations between images into single annotation

add-annotation-overlay-is/od now use nargs='+' for the --labels and --colors options instead of a comma-separated single argument
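
With Python's argparse, nargs='+' lets an option take one or more space-separated values, so the values arrive as a list without any manual splitting. The parser below only mimics the two option names; the help texts and the R,G,B notation for colors are assumptions for this sketch, not the plugin's documented syntax.

```python
import argparse

parser = argparse.ArgumentParser(prog="add-annotation-overlay-od")
# One or more values per option, e.g. --labels cat dog
parser.add_argument("--labels", nargs="+", default=[], help="labels to overlay (hypothetical help text)")
# A comma-separated single argument could not hold R,G,B triplets unambiguously
parser.add_argument("--colors", nargs="+", default=[], help="one R,G,B triplet per label (assumed notation)")

args = parser.parse_args(["--labels", "cat", "dog", "--colors", "255,0,0", "0,0,255"])
print(args.labels)  # ['cat', 'dog']
print(args.colors)  # ['255,0,0', '0,0,255']
```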

wai.annotations.indexedpng 1.0.1

FromIndexedPNG class now outputs a logging message if setting the new annotation indices fails. Since this error occurs before the "wai.annotations - Sourced ..." logging message, this makes it possible to track down the image causing the problem.

wai.annotations.redis.predictions 1.0.2

redis-predict-is now supports grayscale predictions as well

wai.annotations.roi 1.0.1

FromROI.convert_roi_object now also adds label, score, minrect_w and minrect_h to the meta-data of a LocatedObject instance.

wai.annotations.video 1.0.1

Fixed the error message of DropFrames/SkipSimilarFrames in case data of the wrong domain comes through

added: filter-frames-by-label-od

A new release of wai.annotations is out now: 0.7.6

Since the 0.7.3 release, the following changes occurred:

Added wai.annotations.imgaug for performing image augmentations on the image stream (crop, flip, blur, grayscale, contrast, rotate, scale)

Added wai.annotations.imgstats for generating label distributions

Added wai.annotations.imgvis for adding annotation overlays, viewing images, combining annotations into single overlay image

Added wai.annotations.opex for reading/writing the OPEX object detection JSON format

Added wai.annotations.redis.predictions for making predictions via a Redis backend (e.g., image classification, object detection, image segmentation)

Added wai.annotations.video for reading frames from video files/webcams, dropping frames, skipping similar frames, writing video files

Added wai.annotations.yolo for reading/writing [YOLO txt files](https://github.com/waikato-ufdl/wai-annotations-yolo/issues/1)

Upgraded wai.annotations.core version, adding an ISP for discarding polygons and I/O support for images (with empty annotations)

Upgraded wai.annotations.tf version to support TensorFlow 2.7.x

On top of that, the documentation available through ufdl.cms.waikato.ac.nz/wai-annotations-manual has been overhauled and the example section expanded to include examples for some of the new modules/plugins.

From now on, releases will be made available as Docker images at the following URL:

hub.docker.com/repository/docker/waikatoufdl/wai.annotations

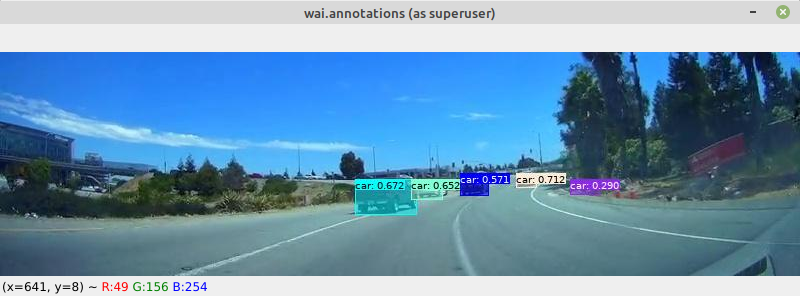

Finally, a working example of using a pretrained YOLOv5 network to annotate (and visualize) frames extracted from a dashcam video can be found in the example section.

Datasets used in our publications are being made publicly available on our dedicated website for datasets:

Our publication, Efficiently Correcting Machine Learning: Considering the Role of Example Ordering in Human-in-the-Loop Training of Image Classification Models, has been accepted at the 27th Annual Conference on Intelligent User Interfaces, Helsinki, Finland (March 21-25).

We will add the paper to our publications page once the proceedings become available.

The newly released wai.annotations.yolo module for the wai.annotations library allows the reading and writing of annotations in a format used by the popular YOLO network architectures.

The wai.annotations release 0.7.4 has this new module as a dependency (as well as the wai.annotations.imgaug module released last week).

The newly released wai.annotations.imgaug module for the wai.annotations library offers some basic image augmentation techniques (crop, flip, gaussian-blur, hsl-grayscale, linear-contrast, rotate) which can be applied to the stream of images that are being processed in the pipeline. Of course, any annotations get processed accordingly. Under the hood, the excellent imgaug library is utilized.

At this stage, the module supports the following domains:

Image Classification Domain

Image Object-Detection Domain